Data Centers 2026: Solving The Speed Squeeze

Timing Tensions Create Execution Risks, But Also Opportunities for Innovation

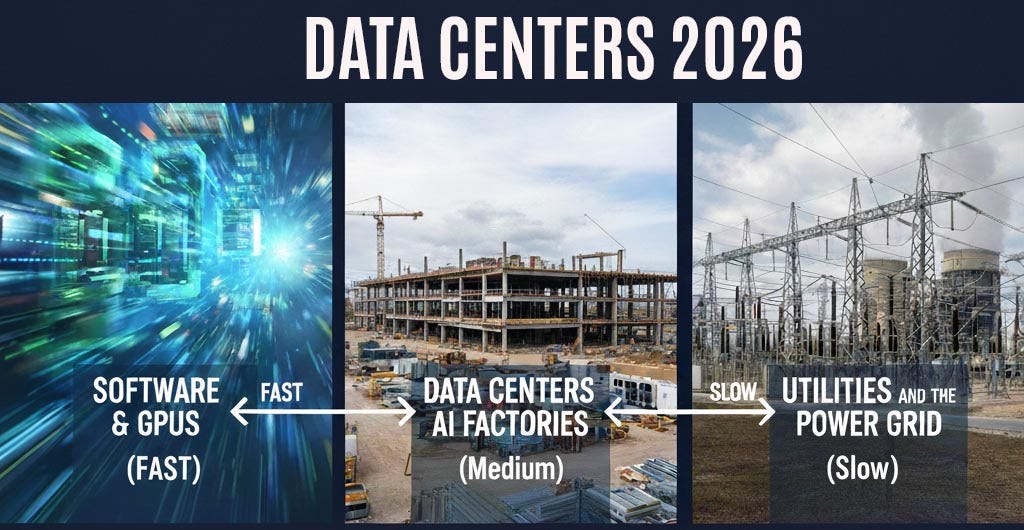

There are three layers of the AI economy, and they are moving at different speeds. In 2026, the big stories in the data center sector will be driven by how major players navigate the tension and timing between these layers.

The three layers:

Software and GPUs - The development of LLMs, agents and applications is moving extremely fast, driven by intense competition between hyperscale tech companies, well-funded startups, and governments. Meanwhile, NVIDIA has accelerated the pace of GPU delivery, dramatically increasing the performance of its chips, along with the amount of power and cooling required to support them.

Data Centers and AI Factories - As the physical infrastructure that translates software into apps and services, data centers represent the execution layer that delivers AI to end users around the world. As large construction projects in a constrained supply chain, data centers take far longer to deploy than software, typically from 12 to 24 months, depending on designs.

Utilities and the Power Grid - Electricity is the lifeblood of every data center, and the key to delivering bits that make AI services a reality. But power grids are extremely complex systems, and utilities generally take years to add new generation and transmission capacity.

These three layers must collaborate for AI to thrive and prosper. As the middle layer, data centers experience the greatest pressure, sandwiched between customers that want GPUs yesterday, and utilities and grid operators who take years to add meaningful capacity.

This “speed squeeze” is being felt across the AI economy. It will define the data center sector in 2026, driving the trends and triumphs, as well as the bottlenecks and busts.

In this article we examine the pressures created by this speed gap, where it is being felt in the data center economy, and the opportunities for solutions.

One thing is clear: the data center sector is the crucial layer, controlling the ability of AI specialists to deliver services they believe will transform our economy and world. Not every project and strategy will succeed, and execution risk will come into sharp focus in 2026.

But the speed squeeze also creates a massive opportunity: Leveraging the huge demand for AI to lead the energy economy into a modernized future, enabling innovation, software and smart policy to accelerate the building blocks of our digital world.

Software and Algorithms: The Need for Speed

The AI development ecosystem is racing ahead, as companies like OpenAI and Anthropic seek competitive advantage to accelerate AI services.

This arms race is powered by hardware, and the key supplier is NVIDIA, whose GPU-based hardware systems have enabled rapid advances in AI development.

To maintain its lead over its chip rivals, NVIDIA has embraced a roadmap of annual upgrades to its GPU systems and architectures, leaving the data center and energy sectors struggling to keep pace.

“When it comes to GPUs, time is money,” said Dylan Patel, the founder of research firm SemiAnalysis.

At the Yotta 2025 conference, Patel explained why the nature of GPUs - their cost, performance-per-watt and lifespan - is driving intense pressure to monetize these capital-intensive investments as soon as possible. He cited Meta’s decision to begin deploying GPU clusters in tents as evidence.

“It’s only one or two months quicker than building your own building, but the pure economics and the time to income generation are worth it,” said Patel. “That time is all just money burning a hole in your pocket. There’s very few physical goods in the world that have margin like this. You’re looking for the best performing TCO. The performance lives up to it, creating captive workloads. The performance by NVIDIA is so far ahead.”

The “time to revenue” push left some cloud providers struggling to make GPU capacity available quickly enough. This created an opportunity for neocloud providers optimized around GPU-as-a-Service, with NVIDIA as a partner and often an investor.

“The reason speed of delivery matters is that it’s absolutely critical for our customers,” said Pradeep Vincent, EVP and Chief Technical Architect for Oracle Cloud Infrastructure. “It drives market leadership.”

Data Centers

The accelerated pace of AI development has huge implications for data center development and delivery. This was clearly reflected during the fall conference season by top executives from the largest hyperscale operators and equipment vendors. Here’s a sampling of their commentary:

“Data centers are being designed from the ground up by silicon,” said Rich Whitmore, the President and CEO of Motivair by Schneider Electric. “Meanwhile, silicon roadmaps are accelerating. There’s a dynamic ripple effect.” (Yotta 2025)

“We are completely redefining cloud infrastructure,” said Oracle’s Vincent. “I have never seen such a rapid change in such a short amount of time. It is a complete reimagination of everything.” (OCP Summit)

“We need dramatic changes in how we think about data center design,” said Partha Ranganathan, VP and Engineering Fellow at Google. “We need to systematically think about how to do this holistically across all aspects of the data center.” (OCP Summit)

“Velocity is really important, and that’s a massive challenge for us,” said Dan Rabinovitsj, the VP, Data Center Infrastructure at Meta. “We are delivering supercomputers as though they’re a mass-produced product. We’re transforming infrastructure to be like a consumer electronics play.” (OCP Summit)

“I think we are at a very important inflection moment,” said Giordano Albertazzi, the CEO of Vertiv. “We’re all thinking about how we can change, and elevate it to giant industrial proportions.” (NVIDIA GTC Washington)

Cooling in the Crosshairs

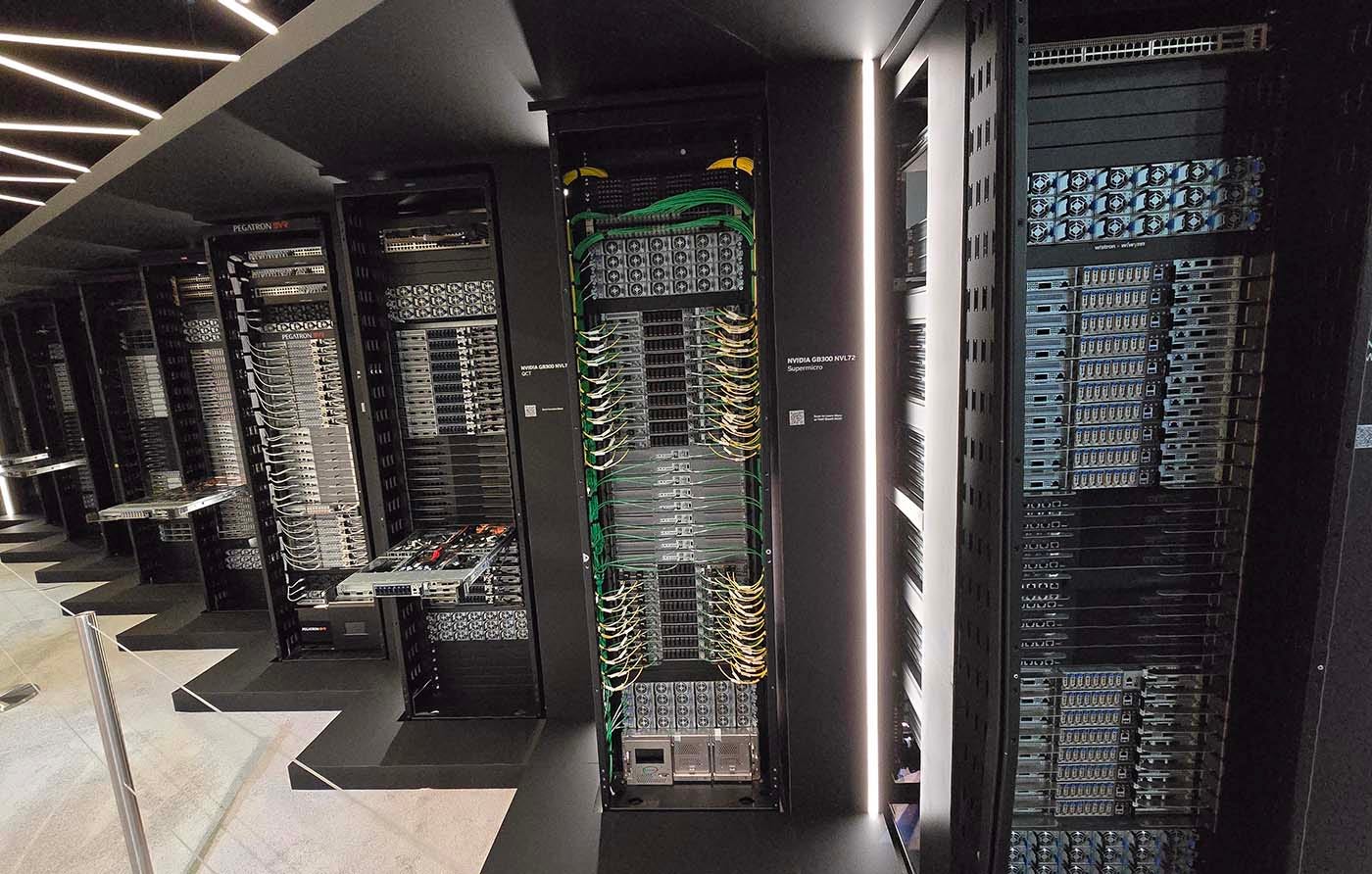

The most obvious candidate for disruption in 2026 is cooling. The AI boom is pushing data centers past the limits of traditional air cooling, driving a shift toward higher-density racks and liquid cooling solutions. As GPU-rich infrastructure becomes the backbone of modern compute, power densities have soared, with the most advanced GPU configurations now topping 125 kW per rack.
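To put the 125 kW figure in perspective, here is a rough back-of-the-envelope sketch of how many racks it takes to consume one megawatt of IT load at different densities. Only the 125 kW value comes from the text above; the 10 kW air-cooled baseline and the function name `racks_per_megawatt` are assumptions chosen for illustration, and the calculation ignores cooling overhead, floor layout and redundancy.

```python
import math


def racks_per_megawatt(rack_kw: float) -> int:
    """Racks needed to draw 1 MW (1,000 kW) of IT load, rounded up."""
    return math.ceil(1000 / rack_kw)


# 125 kW is the density cited in the article.
# 10 kW is an ASSUMED air-cooled baseline, used only for comparison.
scenarios = [
    ("assumed air-cooled rack (10 kW)", 10.0),
    ("advanced GPU rack (125 kW)", 125.0),
]

for label, kw in scenarios:
    print(f"{label}: {racks_per_megawatt(kw)} racks per MW")
```

Under these assumptions, the same megawatt that would be spread across 100 racks at the baseline density fits in 8 racks at 125 kW, which illustrates why high-density deployments shrink the floor space needed per megawatt while concentrating the cooling load.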

NVIDIA has just announced that its next-generation Vera Rubin GPU platform has entered production, meaning even denser systems will be deployed in data centers before the end of 2026.

This trend is fundamentally altering facility design and economics. High-density, liquid-cooled racks reduce the footprint needed per megawatt and unlock higher efficiency, which is critical as real estate, power, and sustainability constraints tighten. Facilities that can host advanced AI infrastructure are increasingly seen as strategic assets.

But that requires meaningful change. Here’s a look at what data center leaders see for the year ahead.